BrainVoyager QX v2.8

Time Course Normalization

A problem when analyzing raw fMRI data is that the signal level may differ substantially between voxels because of physical and physiological aspects of MRI scanning. While GLM results (i.e. the significance of betas and contrasts) are not affected by the signal level, this is a problem when comparing effect sizes across voxels. It is an even more important problem for multi-subject analyses [LINK], since the signal level may differ substantially at corresponding voxels across subjects. To make values more comparable across voxels (and subjects), raw fMRI time course data is usually transformed by percent signal change or (baseline) z normalization.

Assume that subject 1 has a mean baseline value of 2000 and a mean value in condition j of 2050 in the raw fMRI data in a voxel of the fusiform face area (FFA); assume further that the subject has a mean baseline value of 1000 in a voxel of the parahippocampal place area (PPA) and a mean condition value of 1030. While the FFA voxel exhibits a greater absolute effect (50) than the PPA voxel (30), the percent change is actually smaller in the FFA voxel (2.5%) than in the PPA voxel (3%). When considering percent signal change, we would draw the opposite conclusion about which voxel was more active!
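The arithmetic of this example can be checked with a few lines of Python. This is only an illustrative sketch: the helper name `percent_change` is hypothetical and not part of BrainVoyager, and the numbers are the ones given in the example above.

```python
def percent_change(baseline_mean, condition_mean):
    """Express the condition effect as a percentage of the baseline mean."""
    return 100.0 * (condition_mean - baseline_mean) / baseline_mean

# Values taken from the FFA / PPA example in the text.
ffa_abs = 2050 - 2000                   # absolute effect in the FFA voxel
ppa_abs = 1030 - 1000                   # absolute effect in the PPA voxel
ffa_pct = percent_change(2000, 2050)    # relative effect in the FFA voxel
ppa_pct = percent_change(1000, 1030)    # relative effect in the PPA voxel

print(f"FFA: absolute {ffa_abs}, percent {ffa_pct:.1f}%")
print(f"PPA: absolute {ppa_abs}, percent {ppa_pct:.1f}%")
```

The absolute effects rank FFA above PPA, while the percent values rank PPA above FFA.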

Percent Signal Change

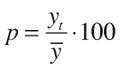

Since it is believed that the fMRI signal changes scale with the level of the measured signal, a percent signal change (psc) transformation of each value with respect to the voxel time course mean value is usually performed before statistical analysis:

That is, the signal y in a voxel at each time point i is divided by the mean of the voxel time course and then multiplied by 100. With this transformation, the mean value of the time course is the same (100) for all voxels (and for all subjects in a multi-subject analysis), and individual values of the time course are expressed as percent changes around that mean. Percent signal change transformation is the default in BrainVoyager QX and is the normalization scheme most widely used in the neuroimaging community. BrainVoyager performs time course normalization implicitly when running a GLM (if the option is turned on). As an alternative, one could save the transformed time course to disk (e.g. with a plugin) and use that data as input for a GLM without time course normalization. BrainVoyager normalizes implicitly during GLM calculation because this saves disk space and makes it easy to re-run the same analysis with other normalization options (see below). Note that it is also possible to run a GLM on native data, e.g. to see absolute effect sizes expressed in raw units, and to express the estimated betas as percent signal change by relating them to the estimated value of the constant term (b0).
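The transformation described above can be sketched in plain Python as follows. This is a minimal illustration for a single voxel, not BrainVoyager's implementation: the function names `percent_signal_change` and `beta_as_percent` and the sample values are hypothetical, and expressing a beta as `100 * beta / b0` is one plausible reading of "relating them to the estimated value of the constant term".

```python
from statistics import fmean

def percent_signal_change(time_course):
    """Divide every value by the time course mean and multiply by 100."""
    mean = fmean(time_course)
    if mean == 0:
        raise ValueError("time course mean is zero; cannot normalize")
    return [100.0 * y / mean for y in time_course]

def beta_as_percent(beta, b0):
    """Assumed conversion of a beta estimated on native data to percent
    signal change, relative to the constant term b0."""
    return 100.0 * beta / b0

# Made-up raw time course of one voxel (arbitrary scanner units).
raw = [1990.0, 2010.0, 2000.0, 2050.0, 2060.0, 1990.0]
psc = percent_signal_change(raw)

print([round(v, 2) for v in psc])
print(round(fmean(psc), 6))          # mean of the transformed time course
print(beta_as_percent(50.0, 2000.0))  # beta of 50 raw units, b0 of 2000
```

After the transformation the values fluctuate around 100 regardless of the original signal level, which is what makes voxels with different raw levels comparable.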

(Baseline) Z Standardization

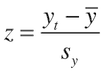

Another popular normalization approach is z transformation:

That is, the signal in a voxel is mean-centered (the mean is subtracted) and then divided by the standard deviation of the signal fluctuations. With this transformation, signal fluctuations from time point to time point are expressed in units of standard deviation. Assuming Gaussian errors, this makes it easy to judge the deviation of a single value (or of a mean effect) as in standard statistics, e.g. a value of -2.5 is 2.5 standard deviations below the mean. Note, however, that z normalization calculated this way operates on all values of the voxel time course, including baseline and stimulus epochs, so the standard deviation also reflects stimulus-related signal changes. To maintain comparability of estimated effect sizes across voxels, one can restrict the data points to baseline epochs when calculating the mean and standard deviation. This approach is called baseline z normalization in BrainVoyager. Baseline z normalization appears to be useful for multi-subject mixed-effects summary statistics analyses, since it helps to keep the variance per subject as similar as possible (LINK).
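The difference between the two z variants can be illustrated with a short Python sketch. It is not BrainVoyager's implementation: the function name `z_normalize`, the `baseline_indices` parameter, and the sample data are hypothetical, and the use of the sample standard deviation (n - 1 in the denominator) is an assumption.

```python
from statistics import fmean, stdev

def z_normalize(time_course, baseline_indices=None):
    """z-transform a voxel time course.

    If baseline_indices is given, the mean and standard deviation are
    estimated from those time points only (baseline z normalization);
    otherwise all time points are used.
    """
    if baseline_indices is None:
        reference = list(time_course)
    else:
        reference = [time_course[i] for i in baseline_indices]
    mean = fmean(reference)
    sd = stdev(reference)  # assumption: sample standard deviation
    if sd == 0:
        raise ValueError("standard deviation is zero; cannot normalize")
    return [(y - mean) / sd for y in time_course]

# Made-up time course: time points 3-5 belong to a stimulus epoch.
raw = [1000.0, 1004.0, 996.0, 1030.0, 1034.0, 1026.0, 1002.0, 998.0]
baseline = [0, 1, 2, 6, 7]

z_all = z_normalize(raw)             # statistics from all time points
z_base = z_normalize(raw, baseline)  # statistics from baseline epochs only

print([round(v, 2) for v in z_all])
print([round(v, 2) for v in z_base])
```

In this toy data the stimulus epoch inflates the standard deviation when all time points are used, so the same raw response yields a smaller z value than with baseline z normalization.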

Both percent signal change and (baseline) z normalization work rather well, and it is difficult to recommend one over the other. Since percent signal change normalization is more widely used, BrainVoyager uses it as the default. Experience suggests that (baseline) z normalization provides more robust results at least in some cases. If time allows, it might be good to run GLMs with both normalization schemes and to check whether comparisons across voxels lead to qualitatively similar conclusions.

Copyright © 2014 Rainer Goebel. All rights reserved.